Combating Data Bias in AI

If you’ve spent the last 4 months of lockdown 3.0 in a long-term relationship with your Netflix account, you’ll have heard of the new docufilm ‘Coded Bias’.

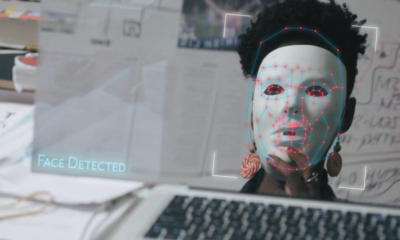

Coded Bias explores the staggering ways Artificial Intelligence has infiltrated our lives. Yet the most shocking question it raises is this: if AI is so deep-rooted in our day-to-day routines, why is it so steeped in exclusivity and bias?

This is what Joy Buolamwini, a computer scientist and founder of the Algorithmic Justice League, set out to uncover after she noticed that facial recognition systems weren’t trained to recognize darker-skinned faces.

Coincidentally, Facebook announced today that it has open-sourced a dataset designed to combat age, gender, and skin-tone biases in AI learning models.

This is certainly a step in the right direction. But where does the industry go next?

Algorithm-writing

Obviously, an AI system doesn’t wake up one day and decide it’s going to be inherently prejudiced. It starts with how the software’s algorithms are written, with imperfect data, and with a lack of understanding of societal issues.

This is partly due to the persistently skewed demographics of the tech industry. When you think of its biggest players and stakeholders, the majority are white males.

How can an industry dominated by one section of society speak on behalf of everyone?

AI can only move forward when under-represented groups of society can come together to work on its development and use. After all, we all take part in its deployment, so it only makes sense to get involved.

Buolamwini discusses this as Coded Bias dives into how automation imposes and perpetuates ready-made injustices regarding racism, sexism, and capitalism.

Surely a technology should only be launched to market once it is broadly accepted by the people affected by its use. It’s quite shocking that this isn’t the current state of play.

So why isn’t it? The problem is that, as a society, we’re so used to trusting technology as a system that is much more powerful and intelligent than we are as humans. This is true to a certain extent, but it is definitely not an excuse to deploy systemically oppressive models at scale.

We must challenge the power structures of those writing the code, rather than those who have the code imposed on them.

Ethical thinking

When it comes to AI, we want it to work like a human brain, without giving it the characteristics of a human brain.

Each human has ethical thinking embedded into their thought processes. Whether they choose to use it or not is another matter, but the beauty of AI is that we can teach it to do what we want.

That’s why companies developing AI systems must build ethical thinking into their design process, considering the range of possible consequences and the remedies for them.

Of course, many tech companies are doing this already. Whether that’s signing up to industry codes of practice or actively working on transparency and accountability, there’s something for everyone to learn.

Yet it’s interesting that one of the biggest ethical risks of AI, particularly when it comes to face recognition, is the idea of creating a ‘Big Brother-type’ dystopia.

As technology continues to advance, it’s becoming easier to detect specific individuals from a large crowd of people without their knowledge or permission.

This of course is in development under the guise of safety, but how can it keep society safe when it marginalises large swathes of society?

Ultimately, if machine learning algorithms are going to make autonomous decisions, we as an industry must be able to explain and understand them.

Broader regulation

As the world of AI continues to grow, so does the legal and regulatory environment in which it exists.

Why? Because it’s data that drives an AI system.

Since AI needs high-quality datasets to work properly, companies are using an increasing amount of data, with or without the knowledge of their users. Generally, people like to know how and when their data is being used.

In order for people to trust the system, it must be fair and representative of all. To ensure this, it would be good practice to run regular independent audits and testing.
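To make the idea of an audit more concrete, here is a minimal sketch of one check an auditor might run: comparing how often a model gives a positive outcome, and how often it is correct, for each demographic group. The function names, the group labels, and the sample records are all made up for illustration, and a real audit would cover far more than these two numbers.

```python
from collections import defaultdict


def audit_by_group(records):
    """Summarise model behaviour per group.

    records: iterable of (group, predicted, actual) tuples with 0/1 labels.
    Returns {group: {"n", "selection_rate", "accuracy"}}.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for group, predicted, actual in records:
        c = counts[group]
        c["n"] += 1
        c["selected"] += predicted                 # positive outcomes given
        c["correct"] += int(predicted == actual)   # predictions that were right
    return {
        g: {
            "n": c["n"],
            "selection_rate": c["selected"] / c["n"],
            "accuracy": c["correct"] / c["n"],
        }
        for g, c in counts.items()
    }


def selection_rate_ratio(report):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = [stats["selection_rate"] for stats in report.values()]
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0


# Hypothetical records, invented purely for illustration: (group, predicted, actual)
sample = [
    ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 0, 1), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

report = audit_by_group(sample)
ratio = selection_rate_ratio(report)

for group, stats in sorted(report.items()):
    print(group, stats)
print("selection rate ratio:", round(ratio, 2))
```

A ratio well below 1.0, or a large gap in accuracy between groups, doesn’t prove the system is unfair on its own, but it is the kind of signal that tells an auditor where to look more closely.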

That said, testing can only take you so far. Perhaps more importantly, companies should spend time engaging in conversations around human bias, to stop it creeping in in the first place.

As technology advances, we can start to hold it accountable to the same standards as we hold humans.

This could be as simple as running AI algorithms alongside human decision makers. The two sets of results can then be compared, and explainability techniques used to understand how each decision was reached and why there may be differences.
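As a rough sketch of that side-by-side comparison, the snippet below takes cases that were decided by both a human and a model, measures how often the two disagree within each group, and pulls out the disagreements for closer review. The field names (`group`, `human`, `model`) and the sample cases are assumptions made for illustration, and the explainability step itself is left out: the flagged cases are simply where it would be applied.

```python
from collections import defaultdict


def disagreement_by_group(cases):
    """Share of cases per group where the human and the model decided differently."""
    totals = defaultdict(int)
    disagreements = defaultdict(int)
    for case in cases:
        totals[case["group"]] += 1
        if case["human"] != case["model"]:
            disagreements[case["group"]] += 1
    return {g: disagreements[g] / totals[g] for g in totals}


def cases_to_review(cases):
    """Cases where the two decisions differ, to be examined in more detail."""
    return [c for c in cases if c["human"] != c["model"]]


# Hypothetical decisions, invented purely for illustration (1 = approve, 0 = reject)
cases = [
    {"id": 1, "group": "group_a", "human": 1, "model": 1},
    {"id": 2, "group": "group_a", "human": 0, "model": 0},
    {"id": 3, "group": "group_a", "human": 1, "model": 0},
    {"id": 4, "group": "group_a", "human": 1, "model": 1},
    {"id": 5, "group": "group_b", "human": 1, "model": 0},
    {"id": 6, "group": "group_b", "human": 1, "model": 0},
    {"id": 7, "group": "group_b", "human": 0, "model": 0},
    {"id": 8, "group": "group_b", "human": 1, "model": 0},
]

rates = disagreement_by_group(cases)
flagged = cases_to_review(cases)

print(rates)
print("cases to review:", [c["id"] for c in flagged])
```

If the model and the humans disagree much more often for one group than another, that gap is worth investigating in both directions, since the bias could sit in the algorithm, in the human process, or in both.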

Then when bias is uncovered, it is not enough to simply change the algorithm and move on. Business owners and technological leaders should also work to improve the human-driven processes underlying it.

Conclusion

None of this is to say that AI isn’t a worthwhile system. It has many potential benefits for businesses, for the economy, and for tackling society’s shortcomings.

But this will only be possible if people trust these systems to produce unbiased results. That can only happen if humans work together to tackle bias in AI, as opposed to blaming the technology.